It costs about $200 a day to keep the equipment at a small-market radio station running. This includes the cost of electricity, as well as the cost of maintenance and repairs. A household radio is far cheaper: it draws only a few watts, so even the roughly four hours a day that the average person spends listening adds almost nothing to an electricity bill.

If you’ve ever wondered how much it costs to keep a radio station on the air all day, wonder no more! Here’s a breakdown of the typical costs associated with running a commercial radio station:

| Expense | Typical cost |
| --- | --- |
| Electricity | The average radio station uses about $200 worth of electricity per day. |
| Programming | Most stations pay for their programming, which can cost anywhere from $100 to $1,000 daily. In addition, many stations also pay for music royalties. |
| Employees | A typical radio station has a staff of about 10 people, which can cost upwards of $500 per day in salaries and benefits. |
| Rent or Mortgage | Radio stations typically lease their space, costing around $2,000 per month. |

Note

All told, these figures add up to roughly $870 to $1,770 per day to keep a commercial radio station on the air, depending mostly on programming costs (the $2,000 monthly lease works out to about $67 per day). Of course, other variable costs can move this figure, and advertising revenue offsets part of it, but this should give you a general idea of the expenses involved in running a radio station.
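As a rough check, the line items in the breakdown can be totaled with a few lines of Python. This is only a sketch: it assumes a 30-day month for the lease and treats the "upwards of $500" staff figure as a flat $500, and the names `DAILY_COSTS` and `daily_total_range` are made up for illustration.

```python
# Daily cost figures from the breakdown, as (low, high) estimates in USD.
DAILY_COSTS = {
    "electricity": (200, 200),
    "programming": (100, 1000),
    "employees": (500, 500),  # assumption: "upwards of $500" treated as $500
}
MONTHLY_RENT = 2000
DAYS_PER_MONTH = 30  # assumption: a 30-day month


def daily_total_range():
    """Return the (low, high) total daily cost in USD."""
    rent_per_day = MONTHLY_RENT / DAYS_PER_MONTH
    low = sum(lo for lo, _ in DAILY_COSTS.values()) + rent_per_day
    high = sum(hi for _, hi in DAILY_COSTS.values()) + rent_per_day
    return low, high


low, high = daily_total_range()
print(f"${low:,.0f} to ${high:,.0f} per day")  # $867 to $1,767 per day
```

The spread comes almost entirely from programming, which is the only line item quoted as a range.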

Does a TV Use More Electricity Than a Radio?

When it comes to electricity usage, there are a lot of factors to consider. The size of the appliance, its power settings, and how often it is used all play a role. So, does a TV use more electricity than a radio?

- In general, yes, TVs use more electricity than radios. This is because they have larger screens and require more power to operate.

- Additionally, many people keep their TVs on for extended periods, increasing energy consumption.

So, How Can You Save on Your Electricity Bill?

If you’re looking to save on your electricity bill, turning off your TV when you’re not using it is a good place to start. Unplugging appliances when they’re not in use can also help reduce energy consumption. You can make a big difference in your overall energy usage by making small changes like these!

How Much Electricity Does a Radio Use Per Hour?

Your radio probably doesn’t use much electricity. In fact, most radios only use a few watts of power. That means that even if you left your radio on for 24 hours, it would only use about 60 watt-hours of electricity.

To put that in perspective, a typical incandescent light bulb uses about 60 watts of power. So if you left your radio on for an entire day, it would only use as much electricity as a single light bulb uses in one hour. Of course, radios come in all shapes and sizes.

Some are more energy efficient than others. And some people listen to their radios more often than others. So your mileage may vary regarding how much electricity your radio uses per hour.
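To make the watt-hour arithmetic concrete, here is a small sketch. The 2.5-watt radio is an assumption (one reading of "a few watts"), and `energy_wh` is a helper name invented for this example.

```python
def energy_wh(watts, hours):
    """Energy in watt-hours = power (W) x time (h)."""
    return watts * hours


RADIO_WATTS = 2.5  # assumption: one reading of "a few watts"
BULB_WATTS = 60    # the light bulb figure quoted in this section

radio_day_wh = energy_wh(RADIO_WATTS, 24)   # radio left on for a full day
bulb_hours = radio_day_wh / BULB_WATTS      # hours of bulb use with the same energy

print(radio_day_wh, bulb_hours)  # 60.0 1.0
```

Swap in the wattage printed on your own radio to see how your numbers compare.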

How Much Does It Cost to Run a TV All Day?

How much does it cost to run a TV all day? It really depends on the TV and how much electricity it uses. An efficient LED TV that draws about 11 watts uses 0.011 kWh per hour, which costs only about $0.0013 (roughly a tenth of a cent) at $0.12 per kWh.

So, running that TV 8 hours a day would cost about $0.01 per day or roughly $0.32 per month.

How Much Does It Cost to Run a TV Per Month?

According to the U.S. Energy Information Administration, the average American household spends about $103 a month on electricity. That number could be even higher if you’re one of those who can’t stand to miss their favorite shows. How much your TV costs to run depends largely on its size and how often you use it.

A small, energy-efficient LED TV that’s used for just a couple of hours a day will cost less than $10 a month to power, while a larger plasma TV that’s left on all day could cost more than $30. Here’s a breakdown of how much it costs to run various types of TVs for different amounts of time each day:

| TV type (size) | 2 hours per day | 4 hours per day | 8 hours per day |
| --- | --- | --- | --- |
| LED TV (32 inches) | $0.73 per month | $1.45 per month | $2.89 per month |
| LCD TV (42 inches) | $1.19 per month | $2.38 per month | $4.76 per month |
| Plasma TV (50 inches) | $1.49 per month | $2.98 per month | $5.96 per month |

How Much Does 1 Watt of Electricity Cost?

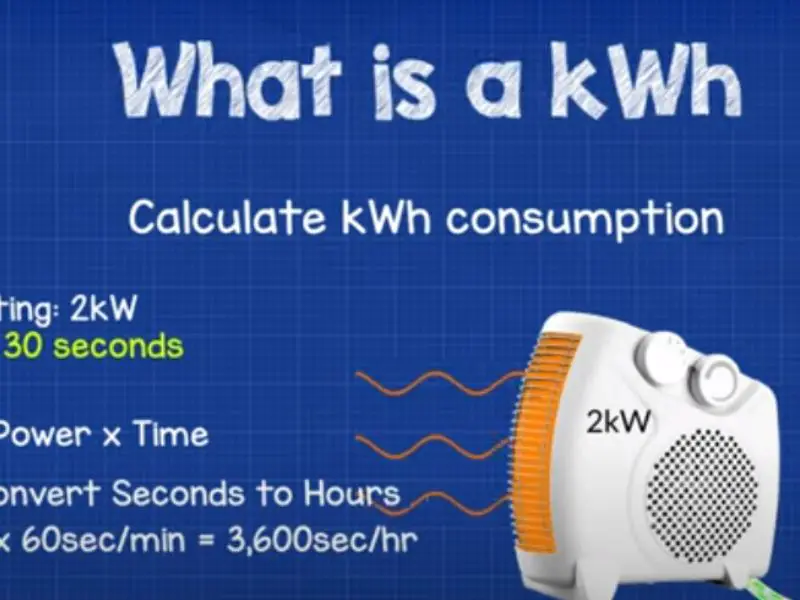

People often say they want to save money on their electric bills but don’t know how. A great place to start is by understanding how much electricity costs.

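This article will discuss the cost of 1 watt of electricity. Simply put, the cost of running a device equals the price you pay per kilowatt-hour (kWh) multiplied by the energy used in kWh, which is the device’s power in watts divided by 1,000 and multiplied by the number of hours it runs.

For example, if you pay $0.12 per kWh and use 1000 watts for an hour, you have used 1 kWh, so your cost would be $0.12 x 1 = $0.12. A single watt running for an hour costs just $0.00012.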

This is just a basic equation and doesn’t include any additional fees or taxes that may apply to your area.
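A minimal sketch of the per-kWh arithmetic, assuming watts are first converted to kilowatts (1 kW = 1,000 W) and ignoring fees and taxes. The function name `running_cost_usd` is hypothetical.

```python
def running_cost_usd(watts, hours, price_per_kwh):
    """Cost in USD of running a device: kWh used times the price per kWh."""
    kwh = watts / 1000 * hours  # convert watts to kilowatts, then multiply by hours
    return kwh * price_per_kwh


# 1000 watts for one hour at $0.12 per kWh
print(f"${running_cost_usd(1000, 1, 0.12):.2f}")  # $0.12

# 1 watt for one hour at the same rate
print(f"${running_cost_usd(1, 1, 0.12):.5f}")     # $0.00012
```

The division by 1,000 is the step that is easiest to forget, and leaving it out inflates the result a thousandfold.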

Now that we have covered the basics let’s take a more in-depth look at how electricity rates are determined and how they vary from place to place.

There are Additional Charges

You first need to understand that there are two types of charges associated with your electric bill: demand charges and energy charges. Demand charges are a set fee based on the highest amount of power you use during a certain period, typically 15 or 30 minutes.

The Bill Depends on the Total Energy Used

On the other hand, energy charges are based on the total energy used over time, usually an entire month. So if you use 1000 watts for an hour, you are billed for 1 kWh whether you use it at night or during peak hours. The amount of energy is the same; what can differ is the price, because utilities with time-of-use rates charge less per kWh when there is less demand for power.

On such a plan, shifting usage to off-peak hours lowers your energy charge. But remember that some utilities do not have demand charges while others may have both types of fees. Be sure to check with yours to better understand what goes into pricing your monthly statement.
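The two kinds of charges can be combined in a simple sketch like the one below. The $10 per kW demand rate, the 5 kW peak, and the 900 kWh of monthly usage are hypothetical values chosen only for illustration; real tariffs vary by utility, and `monthly_bill_usd` is a made-up helper name.

```python
def monthly_bill_usd(peak_kw, total_kwh, demand_rate_per_kw, energy_rate_per_kwh):
    """Sum a demand charge (based on peak kW) and an energy charge (based on kWh)."""
    demand_charge = peak_kw * demand_rate_per_kw
    energy_charge = total_kwh * energy_rate_per_kwh
    return demand_charge + energy_charge


# Hypothetical customer: 5 kW peak, 900 kWh used, $10/kW demand rate, $0.12/kWh
bill = monthly_bill_usd(peak_kw=5, total_kwh=900,
                        demand_rate_per_kw=10, energy_rate_per_kwh=0.12)
print(f"${bill:.2f}")  # $158.00

# A utility with no demand charge is the same formula with a rate of zero
print(f"${monthly_bill_usd(5, 900, 0, 0.12):.2f}")  # $108.00
```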

Prices Vary by Location

Generally, residential customers pay between 9 and 13 cents per kWh, while commercial customers can expect rates as low as 5 cents per kWh. These prices can change depending on various factors such as location, time of year, and economic conditions.

How Much Electricity Does a TV Use Per Hour?

How much electricity does a TV use? This is a question we often get asked, and the answer depends on the size and type of your TV. For example, an old cathode ray tube TV can use as much as 400 watts of power, while a newer LCD TV uses around 60 watts.

So, how can you calculate your own usage? Follow the step-by-step guideline to find out:

| Step | What to do |
| --- | --- |
| First step | The first step is to determine your TV’s power rating in watts. You can usually find this information in the user manual or on the back of the television itself. |
| Second step | Once you have this number, simply multiply it by the number of hours that you use your TV each day. So, if you have a 60-watt LCD TV and watch it for 5 hours daily, your daily electricity usage would be 300 watt-hours (60 x 5). To calculate your monthly usage, just multiply this number by 30 days, which gives 9,000 watt-hours (9 kWh). |
| Third step | And finally, to get your yearly estimate, multiply by 12 months. In this case, that would be 108,000 watt-hours, or 108 kWh (300 x 30 x 12). Of course, these are estimates since other factors are involved, such as standby mode and screen brightness. |

But hopefully, this gives you a good idea of how much electricity your television is using and some ways that you can reduce consumption.
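The steps above can be sketched in Python as follows. The function name `tv_usage_wh` is invented for this example, and the 30-day month and 12-month year follow the simplification used in the steps.

```python
def tv_usage_wh(rated_watts, hours_per_day, days_per_month=30, months_per_year=12):
    """Return (daily, monthly, yearly) energy use in watt-hours."""
    daily = rated_watts * hours_per_day   # step 2: watts x hours per day
    monthly = daily * days_per_month      # step 2: daily x 30 days
    yearly = monthly * months_per_year    # step 3: monthly x 12 months
    return daily, monthly, yearly


daily, monthly, yearly = tv_usage_wh(60, 5)  # 60-watt LCD TV, 5 hours a day
print(daily, monthly, yearly)                # 300 9000 108000
print(yearly / 1000, "kWh per year")         # 108.0 kWh per year
```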

Which Is Cheaper to Run: TV or Radio?

The cost of running a TV or radio station depends on many factors, including the type of equipment used, the size of the market, and the number of hours of operation. However, it is generally cheaper to run a radio station than a TV station. Let’s look at the factors that give radio stations an advantage over TV stations:

| Advantage | Explanation |
| --- | --- |
| Radio requires less equipment. | One reason is that radio stations require less equipment than TV stations. A typical radio station only needs one or two transmitters, whereas a TV station requires multiple transmitters and antennas. |
| Radio stations require lower power levels. | In addition, radio stations can operate with lower power levels than TV stations, which further reduces their operating costs. |
| Radio has fewer broadcast regulations. | Another reason radio is generally cheaper to operate than TV is that radio broadcasters have fewer regulatory requirements. For example, the Federal Communications Commission (FCC) imposes no limits on how much a radio station can spend on advertising or promotions. By contrast, the FCC imposes strict limits on television broadcasters’ ability to engage in certain types of marketing activities. |
| People love the radio more. | Finally, it should be noted that most people still listen to the radio more often than they watch television. |

This fact gives radio stations an advantage in terms of reach and audience size, both of which contribute to lower operating costs.

How Many Watts Does a Clock Use?

How many watts does a clock use? This is a question that we get asked quite often. Like most things in life, the answer is “it depends.”

The type of clock, the age of the clock, and how it is used all play a role in how much power it uses.

- A standard wall clock typically uses between 1 and 3 watts of power.

- A battery-operated clock will use even less power, as low as 0.1 watts.

- A digital alarm clock can use anywhere from 4-10 watts, depending on its features (like a radio or backlight).

The best way to determine how much power your specific clock uses is to check the manufacturer’s website or look for the wattage rating on the back of the clock.

Frequently Asked Questions

Does Radio Take a Lot of Electricity?

No, a radio does not use much electricity. A typical radio uses only a few watts of power, which is very small compared to other household electronics. Even if you leave your radio on all day, it will cost you a few cents per day at most.

Which Uses More Electricity: a TV or a Radio?

TVs and radios are both common household appliances that use electricity. But which one uses more electricity? To answer this question, we need to consider two factors: the power consumption of each appliance and the amount of time each is used.

The power consumption of a TV can vary widely depending on the type of TV but is typically between 30 and 200 watts. A radio’s power consumption is usually much lower, between 1 and 20 watts, and radios are often used for shorter periods of time than TVs. On both counts, the TV is usually the bigger electricity user.

How Much Power Does a Portable Radio Use?

Portable radios use very little power. The average portable radio uses about 1 watt of power. This is about the same amount of power as a small light bulb.

Does Leaving the TV on Waste Electricity?

Leaving the TV on when you’re not watching it wastes electricity. The average TV uses about 0.5-1 watts of power in standby and about 30-40 watts when turned on. That means if you leave your TV on for 8 hours a day, it will use 240-320 watt-hours of electricity per day, or about 0.24-0.32 kilowatt-hours (kWh).

If your TV is one of the more efficient models available today and only uses 30 watts when turned on, that’s still about 88 kWh per year at 8 hours a day, and over 260 kWh per year if it is left on around the clock, which is enough to add about $30 to your annual electricity bill at $0.12 per kWh.
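As a rough sketch of the annual arithmetic, the snippet below adds standby consumption to the hours the TV is switched on. The 30-watt and 1-watt figures come from the ranges quoted above; the $0.12 per kWh price and the name `annual_kwh` are assumptions for illustration.

```python
def annual_kwh(on_watts, standby_watts, hours_on_per_day):
    """Yearly energy in kWh for a TV that is on part of the day and in standby the rest."""
    hours_standby = 24 - hours_on_per_day
    daily_wh = on_watts * hours_on_per_day + standby_watts * hours_standby
    return daily_wh * 365 / 1000


PRICE_PER_KWH = 0.12  # assumption: example residential rate in USD

for hours in (4, 8, 24):
    kwh = annual_kwh(on_watts=30, standby_watts=1, hours_on_per_day=hours)
    print(f"{hours:>2} h/day: {kwh:6.1f} kWh/year, ${kwh * PRICE_PER_KWH:.2f}")
```

Comparing the rows shows how much of the yearly total comes from hours when nobody is watching.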

End Note

The cost of running a radio station all day can vary depending on the station and the type of equipment it uses. The average cost for a small-market radio station to run its equipment for 24 hours is about $200. This includes the cost of electricity, which is typically the biggest expense.

For a larger market radio station, the costs can be upwards of $1,000 per day.
